Top 5 Python Web Scraping Libraries in 2025


In 2024, an estimated 149 zettabytes of data were created, captured, copied, and consumed worldwide. By 2028, this number is expected to skyrocket beyond 394 zettabytes! Managing, collecting, and analyzing such a massive amount of data will be a challenge, but it also opens up exciting opportunities for innovation in data management and analysis solutions, including web scraping.

Web scraping allows businesses and organizations to gather large volumes of publicly available data from websites, such as product prices, news updates, social media trends, or public datasets. This data can then be fed into analytics pipelines to uncover insights on market trends, customer preferences, and competitive intelligence.

Python, one of the most popular programming languages for data analysis, offers a range of powerful libraries to make web scraping more efficient. In this article, we’ll look at the top 5 Python web scraping libraries for 2025, each with its own unique features to simplify and improve the data collection process.

5 Best Python Web Scraping Libraries in 2025

1. Selenium

Selenium may have started as a tool for automating web browsers for testing, but it has become a popular option for scraping dynamic websites. With Selenium, you can automate web browser actions and simulate user interactions like clicking buttons, filling out forms, and navigating web pages through code. This makes it a solid choice for scraping JavaScript-heavy sites.

Why use Selenium?

- It can handle dynamic websites where content is loaded after the initial page load.

- It allows full control over the browser, mimicking real-user actions.

- It supports multiple browsers, such as Chrome, Firefox, and Safari.

When should you use Selenium?

Selenium is perfect for websites that need user interactions, such as scrolling, logging in, or submitting search queries. If you’re scraping an e-commerce site that loads products as you scroll, or a news site that only reveals content after you interact with the page, Selenium will do the job.
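For sites that load more products as you scroll, a common pattern is to scroll to the bottom repeatedly until the page height stops growing. Below is a sketch of that approach. `scroll_to_bottom` and its `pause` and `max_rounds` parameters are hypothetical names, and the fixed pause is a simplification you may need to tune for each site.

```python
import time


def scroll_to_bottom(driver, pause: float = 2.0, max_rounds: int = 20) -> int:
    """Scroll until the page height stops growing (or max_rounds is reached).

    `driver` is a Selenium WebDriver. Returns the number of scrolls performed.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        rounds += 1
        time.sleep(pause)  # Give the page time to load the next batch of items
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # No new content appeared, so we've reached the end
        last_height = new_height
    return rounds
```

After `driver.get(url)`, call `scroll_to_bottom(driver)` and then collect the loaded items with `driver.find_elements(...)` as usual.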

Example

Let’s say you need to scrape data from a website where clicking a button loads more results. The code below shows you how to interact with the page by clicking the button to reveal more content:

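```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

driver = webdriver.Chrome()  # Selenium 4.6+ downloads a matching ChromeDriver automatically

try:
    driver.get("https://example.com")
    wait = WebDriverWait(driver, 10)  # Wait up to 10 seconds for each condition

    # Count the items shown before clicking
    initial_count = len(driver.find_elements(By.CSS_SELECTOR, ".item-class"))

    # Wait until the button is clickable, then click it
    load_more_button = wait.until(EC.element_to_be_clickable((By.ID, "load-more-btn")))
    load_more_button.click()

    # Wait for new content to load (more items than before) instead of sleeping a fixed time
    wait.until(lambda d: len(d.find_elements(By.CSS_SELECTOR, ".item-class")) > initial_count)

    items = driver.find_elements(By.CSS_SELECTOR, ".item-class")
    for item in items:
        print(item.text)
finally:
    driver.quit()  # Always close the browser, even if something fails
```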

2. Requests

For static websites where the data is already available in HTML, the Requests library is one of the simplest and fastest tools to use. This lightweight HTTP library allows you to quickly fetch page content using GET requests and extract the data you need from the response, without loading unnecessary resources like JavaScript.

Why use Requests?

- It's fast and lightweight.

- Ideal for sites where content is directly available in the HTML.

- Easily integrates with other libraries like Beautiful Soup for parsing HTML.

When should you use Requests?

Requests is perfect for websites that have a stable, predictable structure, require little to no interaction with the page, and don't rely heavily on JavaScript to load dynamic content.

Example

The example below fetches the content of a URL and prints the HTML directly:

```python
import requests

response = requests.get("https://www.example.com", timeout=10)  # Requests has no default timeout

if response.status_code == 200:
    print(response.text)
else:
    print(f"Failed to retrieve the page (status code {response.status_code}).")
```
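For anything beyond a one-off request, it usually pays to reuse a `requests.Session`, which keeps connections open between requests, and to retry transient failures. The sketch below assumes you want up to three retries on rate-limit and server errors; the `make_session` helper and the User-Agent string are placeholders.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(retries: int = 3, backoff: float = 0.5) -> requests.Session:
    """Build a Session that reuses connections and retries transient failures."""
    session = requests.Session()
    # Identify your scraper; this User-Agent string is a placeholder
    session.headers.update({"User-Agent": "my-scraper/1.0 (contact@example.com)"})
    retry = Retry(
        total=retries,
        backoff_factor=backoff,  # Wait longer between each successive retry
        status_forcelist=[429, 500, 502, 503, 504],  # Rate limits and server errors
        allowed_methods=["GET"],  # Only retry idempotent requests
    )
    adapter = HTTPAdapter(max_retries=retry)
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    return session
```

`make_session().get("https://www.example.com", timeout=10)` behaves like `requests.get()`, but retries failed GETs with an increasing delay between attempts. Since Requests applies no timeout by default, it's worth passing one on every call.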

3. Beautiful Soup

Beautiful Soup is a popular Python library for parsing HTML and XML files. It transforms complex HTML/XML documents into a Python object tree and provides simple methods for navigating, searching, and modifying the tree. This makes it easy to navigate the structure of web pages and extract the data that you need. However, since it’s a parser, you’ll need to pair it with an HTTP library like Requests to scrape data effectively.

Why use Beautiful Soup?

- It simplifies the process of parsing and navigating HTML documents.

- Works seamlessly with Requests to make web scraping even easier.

- It’s great for cleaning up messy HTML and extracting data from complex structures.

When should you use Beautiful Soup?

Use Beautiful Soup if you’re scraping static websites that don’t rely on JavaScript to display content but have a complex HTML structure. It helps you search for specific tags, classes, IDs, and other elements, making it easier to extract the data you need from complicated HTML documents.
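To see these search methods side by side, here's a small sketch that runs against a made-up HTML snippet instead of a live page: `find()` locates an element by ID, `find_all()` matches a tag and class, and `select()` does the same with a CSS selector.

```python
from bs4 import BeautifulSoup

# A small, made-up HTML snippet standing in for a downloaded page
html = """
<div id="catalog">
  <div class="product-item"><h2 class="product-name">Desk Lamp</h2>
    <span class="product-price">$24.99</span></div>
  <div class="product-item"><h2 class="product-name">Office Chair</h2>
    <span class="product-price">$149.00</span></div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Search by ID
catalog = soup.find(id="catalog")

# Search by tag + class with find_all(), or with an equivalent CSS selector via select()
names = [h2.get_text(strip=True) for h2 in catalog.find_all("h2", class_="product-name")]
prices = [span.get_text(strip=True) for span in soup.select("#catalog .product-price")]

for name, price in zip(names, prices):
    print(f"{name}: {price}")
```

Running it prints `Desk Lamp: $24.99` followed by `Office Chair: $149.00`.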

Example

The example below scrapes a webpage that lists products, each with a name, price, and description, by locating each field through its class name:

```python
import requests
from bs4 import BeautifulSoup

url = 'https://example.com/products'
response = requests.get(url, timeout=10)
response.raise_for_status()  # Stop early on HTTP errors (4xx/5xx)

soup = BeautifulSoup(response.text, 'html.parser')


def get_text(parent, tag, class_name):
    """Return the stripped text of the first matching child, or None if it's missing."""
    element = parent.find(tag, class_=class_name)
    return element.get_text(strip=True) if element else None


# Find all product containers (assuming each product is within a <div class="product-item">)
products = soup.find_all('div', class_='product-item')

# Loop through each product and extract relevant information
for product in products:
    # Assumed markup: <h2 class="product-name">, <span class="product-price">,
    # and <p class="product-description"> inside each product container
    product_name = get_text(product, 'h2', 'product-name')
    product_price = get_text(product, 'span', 'product-price')
    product_description = get_text(product, 'p', 'product-description')

    # Print the extracted data
    print(f'Product Name: {product_name}')
    print(f'Price: {product_price}')
    print(f'Description: {product_description}')
    print('----')
```

4. Playwright

Playwright is a relatively new tool in the web scraping scene. Similar to Selenium, it automates web browsers: it supports Chromium (including Google Chrome and Microsoft Edge), Firefox, and WebKit (the engine behind Safari), and it can emulate mobile devices such as Chrome for Android and Mobile Safari. Built to handle modern web technologies, it’s one of the best Python libraries for automating web browsers to scrape data from websites.

Why use Playwright?

- It's often faster and more efficient than Selenium, especially for modern websites, and it automatically waits for elements to be ready before interacting with them.

- Supports headless browsing, which makes it more resource-friendly.

- Handles dynamic JavaScript content with ease.

When should you use Playwright?

Playwright is highly effective for dynamic content and modern web applications, and supports concurrent tasks. This makes it an excellent choice for scraping JavaScript-heavy websites or those with larger datasets, such as social media platforms, online booking systems, or streaming services.

Example

Here’s an example of using the Playwright Python library to scrape available booking slots from an online booking system:

```python
from playwright.sync_api import sync_playwright


def scrape_booking_slots():
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        context = browser.new_context()
        page = context.new_page()

        booking_url = "https://example.com/booking"
        page.goto(booking_url)

        # Wait for the target element to load
        page.wait_for_selector(".booking-slots")

        # Scrape the available slots
        slots = page.query_selector_all(".slot-item")
        available_slots = []

        for slot in slots:
            slot_time = slot.query_selector(".slot-time").inner_text()
            status = slot.query_selector(".slot-status").inner_text()

            if status.strip().lower() == "available":  # Filter for available slots
                available_slots.append(slot_time)

        # Print the results
        print("Available Booking Slots:")
        for slot_time in available_slots:
            print(f"- {slot_time}")

        browser.close()


if __name__ == "__main__":
    scrape_booking_slots()
```

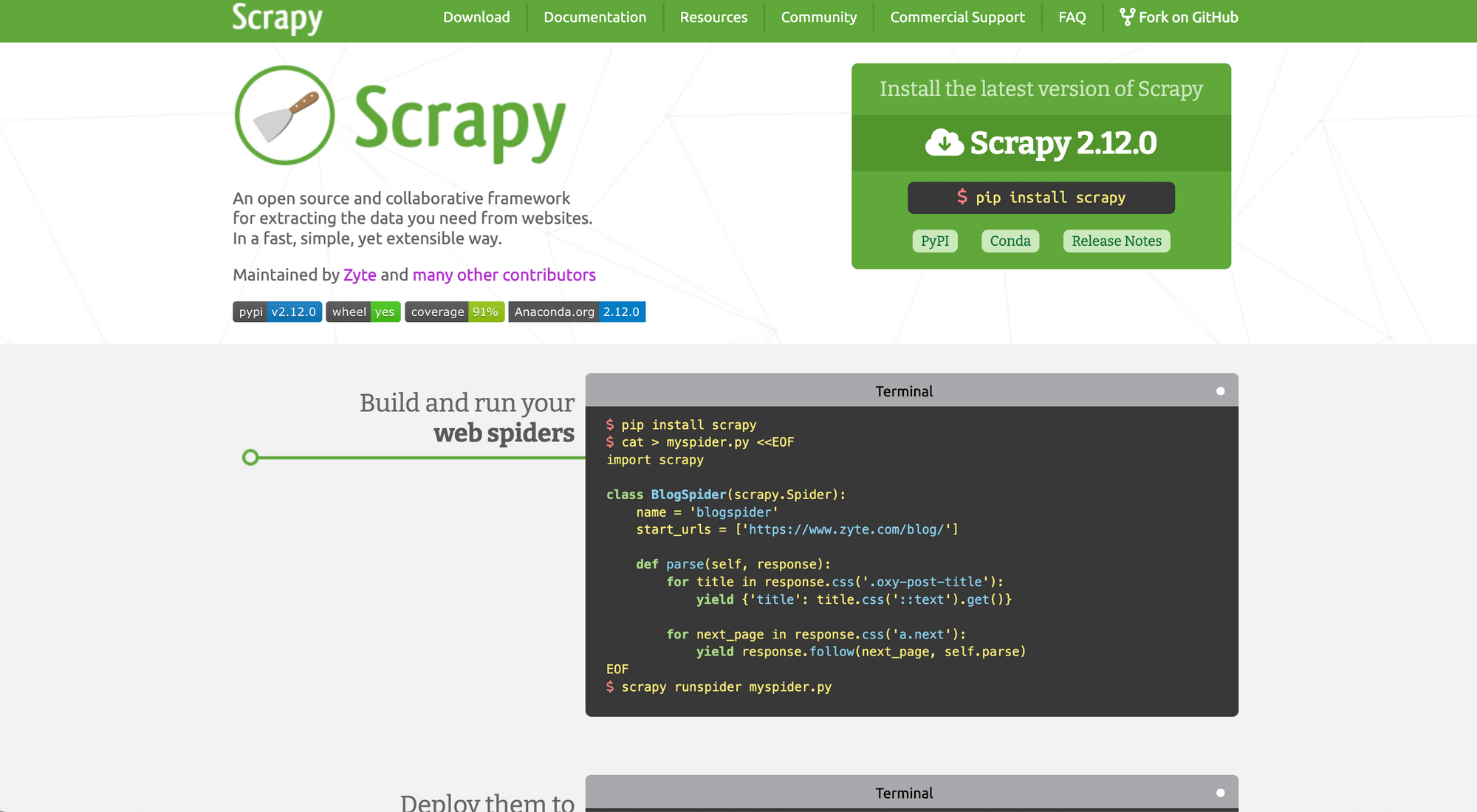

5. Scrapy

Scrapy is an open-source, all-in-one framework for web crawling and scraping. Unlike the other libraries on this list, it handles requests, parsing, and data storage in one package. It’s also highly extensible: you can add new features through components such as middlewares and item pipelines without modifying its core.

Why use Scrapy?

- It’s built for large, complex scraping projects.

- Asynchronous scraping for higher performance.

- Provides built-in tools for managing requests and storing data.

When should you use Scrapy?

Scrapy is perfect for large-scale projects because of its efficient asynchronous scraping capabilities. Its feed exports let you save data in formats such as JSON, CSV, and XML, and its spiders are easy to run on a schedule, making it ideal for tasks like regularly crawling a news website to gather the latest articles.

Example

The code below shows an example of scraping all products from an e-commerce site:

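```python
import scrapy


class ProductSpider(scrapy.Spider):
    name = "products"
    start_urls = ["https://example.com/products"]

    def parse(self, response):
        # Extract the name and price of every product on the current page
        for product in response.css(".product"):
            yield {
                "name": product.css(".product-name::text").get(),
                "price": product.css(".product-price::text").get(),
            }

        # Follow the pagination link, if any (assumes markup like <a class="next" href="...">)
        next_page = response.css("a.next::attr(href)").get()
        if next_page:
            yield response.follow(next_page, callback=self.parse)
```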

Using APIs as an Alternative: Roborabbit API

If you want to skip the hassle of coding a web scraper from scratch, Roborabbit provides a simpler, more convenient solution. It’s a scalable, cloud-based, no-code web scraping tool that lets you extract data from websites without writing a single line of code. That said, it also offers an API for integration, making it a flexible choice for developers who want to add web scraping capabilities to their applications.

Here’s an example of a Roborabbit task that extracts data from multiple web pages:

And here’s the data scraped from the task above:

```json
[
  {
    "link": "https://www.roborabbit.com/blog/the-ultimate-guide-to-web-scraping-for-beginners-10-tips-for-efficient-data-extraction/",
    "author": "Josephine Loo",
    "date": "September 2024",
    "description": "In this article, we'll cover the basics of web scraping and share tips to help you improve your data extraction process. No matter if you're just starting or looking to improve your web scraping skills, you'll find practical tips to make your web scraping more efficient.",
    "title": "The Ultimate Guide to Web Scraping for Beginners: 10 Tips for Efficient Data Extraction"
  },
  {
    "link": "https://www.roborabbit.com/blog/our-guide-to-roborabbit-element-interaction/",
    "author": "Julianne Youngberg",
    "date": "August 2024",
    "description": "Roborabbit gives you the tools to automate a wide range of user interactions. By understanding how to effectively use these actions, you can create efficient, reusable automations that mimic human behavior and produce consistent, high-quality results.",
    "title": "Our Guide to Roborabbit Element Interaction"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-use-puppeteer-in-aws-lambda-for-web-scraping-via-serverless/",
    "author": "Josephine Loo",
    "date": "August 2024",
    "description": "In this article, we’ll explore how AWS Lambda’s serverless architecture can simplify the deployment of your Puppeteer tasks and walk you through the process of setting up Puppeteer in AWS Lambda using the Serverless framework.",
    "title": "How to Use Puppeteer in AWS Lambda for Web Scraping via Serverless"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-test-search-bar-functionality-without-code/",
    "author": "Julianne Youngberg",
    "date": "August 2024",
    "description": "By leveraging the power of nocode automation tools like Roborabbit, website owners can efficiently validate functionality, identify issues, and continually optimize the search experience for visitors. Here are our tips for getting started.",
    "title": "How to Test Search Bar Functionality Without Code"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-scrape-lists-of-urls-from-a-web-page-using-roborabbit/",
    "author": "Josephine Loo",
    "date": "July 2024",
    "description": "Discover how to efficiently scrape URLs from websites for analyzing a site's linking structure, uncovering potential backlink opportunities, validating links, etc. using Roborabbit in this article.",
    "title": "How to Scrape Lists of URLs from a Web Page Using Roborabbit"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-automatically-save-images-with-roborabbit/",
    "author": "Julianne Youngberg",
    "date": "July 2024",
    "description": "Automatic image extraction can supercharge a variety of use cases. With Roborabbit's browser automation capabilities, you have several options to quickly and efficiently save the images you need.",
    "title": "How to Automatically Save Images with Roborabbit"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-use-a-proxy-in-curl/",
    "author": "Josephine Loo",
    "date": "July 2024",
    "description": "cURL, known for its simplicity and versatility, is a preferred tool for API testing, web automation, and web scraping. In this article, we’ll learn how to use cURL with a proxy, to facilitate tasks that require enhanced privacy and security, bypass geo-restrictions, or route requests.",
    "title": "How to Use a Proxy in cURL"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-debug-your-roborabbit-tasks-a-nocode-guide/",
    "author": "Julianne Youngberg",
    "date": "July 2024",
    "description": "Debugging automated browser tasks can be a challenge, and knowing where to start troubleshooting can be a lifesaver. This guide will show you where to start when a Roborabbit task goes wrong.",
    "title": "How to Debug Your Roborabbit Tasks (A Nocode Guide)"
  },
  {
    "link": "https://www.roborabbit.com/blog/how-to-make-a-discord-bot-to-send-price-change-alerts-automatically/",
    "author": "Josephine Loo",
    "date": "July 2024",
    "description": "In Discord communities, bots like Carl-bot, MEE6, Dyno, and Pancake are essential for improving server functionality and engaging users. Did you know you can also make your own Discord bot tailored to your specified needs? Let’s learn how in this article!",
    "title": "How to Make a Discord Bot to Send Price Change Alerts Automatically"
  }
]
```

To use this web scraping task in Python, simply send an HTTP request to Roborabbit’s API with the correct API key and task ID. The scraped data will then be returned in the response.
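The exact endpoint isn't given here, so the sketch below rests on assumptions: the base URL, the `/tasks/{task_id}/runs` path, and bearer-token authentication are placeholders to check against the Roborabbit API reference. It only prepares the request, so you can inspect it before sending anything; `build_run_request` is a hypothetical helper.

```python
import requests

# ASSUMPTION: placeholder base URL modeled on a typical REST layout;
# check the Roborabbit API reference for the real endpoint.
API_BASE = "https://api.roborabbit.com/v1"


def build_run_request(api_key: str, task_id: str) -> requests.PreparedRequest:
    """Prepare (but don't send) a request that triggers a run of a saved task."""
    request = requests.Request(
        "POST",
        f"{API_BASE}/tasks/{task_id}/runs",  # Hypothetical endpoint path
        headers={"Authorization": f"Bearer {api_key}"},  # Assumed bearer-token auth
    )
    return request.prepare()
```

Once the placeholders match the real API, you could send it with `requests.Session().send(build_run_request(API_KEY, TASK_ID), timeout=30)` and inspect the response.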

🐰 Hare Hint: For more details, check out the API reference and guide on Web Scraping without a Library (Roborabbit API).

Final Thoughts

When choosing a web scraping library, consider the complexity of the website, your project's scale, and your familiarity with the tools. To make it easier for you, here’s a quick comparison of the top libraries covered in this article:

| Library | Best For | Strengths | Limitations |
|---|---|---|---|
| Selenium | Dynamic websites with user interactions | Simulates real user actions; handles JavaScript-heavy content | Slower than other tools; higher resource usage |
| Requests | Simple, static websites | Fast and lightweight; easy integration with parsers like Beautiful Soup | Cannot handle dynamic content |
| Beautiful Soup | Parsing and extracting data from complex HTML | Simplifies HTML parsing; cleans messy HTML structures | Requires an HTTP library like Requests |
| Playwright | Modern, JavaScript-heavy websites | Faster and more efficient than Selenium; supports headless browsing | Steeper learning curve for beginners |
| Scrapy | Large-scale scraping projects | Asynchronous scraping; integrated tools for requests and data storage | Overkill for small, simple tasks |
| Roborabbit API | No-code, quick web scraping solutions | No coding required; scalable and easy to use | Less control compared to coding your scraper |

For static websites, Requests and Beautiful Soup will serve you well. If you’re dealing with dynamic, JavaScript-heavy websites, Selenium or Playwright are more suitable. For large-scale projects, Scrapy is your best bet, while APIs like Roborabbit provide a straightforward, no-code solution if you prefer not to build a scraper yourself.

At the end of the day, the best tool depends on your specific needs. Don’t hesitate to try out a few of these libraries to find the one that works best for you!